Modern technology has always evolved quickly, but the last few years have been unlike any other time in history. Its growth has shifted into hyper-speed, giving us functioning artificial intelligence (AI), realistic virtual reality (VR), compact augmented reality (AR), biotechnology, and more. But even though these are some of humankind's most remarkable feats, the ethical concerns surrounding these technologies are rising just as ferociously.

In this post, we’ll dive deep into the top ten ethical concerns surrounding modern technology in 2023 and their impact on our lives.

So, let’s begin.

The Advancement in Modern Technology

Within the past three years, we’ve seen breakthroughs in artificial intelligence, quantum computing, virtual reality, and much more.

However, the ways in which some of these technologies function have also raised many ethical questions that we might not yet have answers to.

Let’s have a look.

1. Consumer Privacy

When we blindly accept terms and conditions, we often give companies permission to collect and store far more information about us than we would knowingly give away.

This data helps them design algorithms that compel us to buy things we don’t need and engage in activities we normally wouldn’t.

I’ve covered this in detail in my post, “Social Media Algorithms.” So, give it a read.

Also, while we’re on the topic of not reading terms and conditions, I want to introduce you to an amazing site called TOSDR (Terms of Service; Didn’t Read). It summarizes and grades the terms of service of many popular websites in a digestible format. So, when you’re signing up for a new site, check it first.

With the rise of the Internet of Things (IoT), data collection is going to become even more sophisticated. As smart homes become more common, experts fear that tech companies will soon know more about you as a person than your loved ones do.

2. Autonomous Technology

By 2023, most people have heard of autonomous technology. We have cars that drive themselves, drones that deliver packages with unprecedented precision, robots that can do human jobs, and more.

But some experts are worried that we’re trusting technology too much without understanding it properly.

For example, if a self-driving car hits someone, who is to blame? While it’s true that these are sophisticated machines that rarely make mistakes, we also know that machines malfunction.

So, can we be certain? How do we ensure that these machines are safe and won’t cause harm to people?

Besides safety, experts are also worried about bias. Autonomous technology is only as unbiased as the people who design it and the data it learns from. If those people have biases, the technology can reflect them. For example, if a facial recognition system is trained on biased data, such as photos that under-represent certain groups, it may not work as well for those groups.
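One common way to check for this kind of bias is a disaggregated evaluation. Instead of reporting a single overall accuracy figure, you measure accuracy separately for each group of people. The Python sketch below is hypothetical. The group names and the toy results are made up for illustration and don’t come from any real facial recognition system.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return the share of correct predictions for each group.

    Each record is a (group, predicted, actual) tuple.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Hypothetical toy results from a face-matching system: every photo
# should have been recognised as a "match".
results = (
    [("group_a", "match", "match")] * 9
    + [("group_a", "no_match", "match")] * 1
    + [("group_b", "match", "match")] * 6
    + [("group_b", "no_match", "match")] * 4
)

print(accuracy_by_group(results))
# → {'group_a': 0.9, 'group_b': 0.6}
```

A single overall score for this toy data would be 75%, and that number hides the 30-point gap between the two groups.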

3. Computing’s Effect on the Environment

Today, around two-thirds of the global population uses a smartphone, billions of people own a laptop or a desktop computer, and millions own smart TVs and other electronic gadgets. All of these gadgets run on electricity, and generating that electricity releases millions of tons of greenhouse gases into our environment. That’s before we even count the pollution factories generate while manufacturing these devices.

Besides that, we also produce e-waste (electronic waste), which contains toxic substances such as lead, mercury, and cadmium that can harm the environment. I’ve written all about it in my post, “E-Waste—A growing threat to the environment: How are you contributing?”

So, while we grow as a civilization, we’re also devastating our environment. Is that ethical?

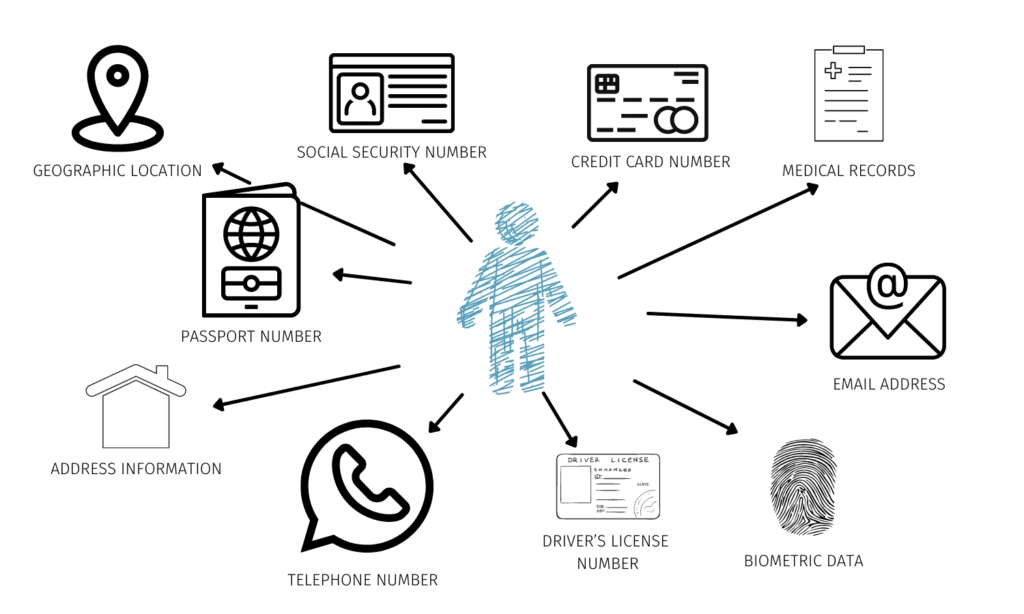

4. Mass Storage of Personally Identifiable Information

In marketing, there’s a strategy called the customer avatar, or customer persona, in which you use your imagination to create a profile of the ideal customer for your products.

For example, if you’re selling children’s toys, you’ll create a customer persona for parents with young children. You then guess how they go about their daily lives, along with their likes, dislikes, fears, frustrations, and pain points. Once you have that, you market your product as a solution.

This guesswork is fading in the modern world because companies can now obtain personally identifiable information (PII) about their target demographic by collecting or buying it.

Algorithms built on that data then help companies target those customers with extreme precision, compelling them to buy whatever it is they’re selling.

This may seem like a smart way to do business, but remember that it often happens without your meaningful consent or even your knowledge.

5. Commoditization of User Data

We already discussed this in previous sections, but the commoditization of user data is a huge business model in the modern digital age.

In 2021, the fertility app Flo came under the Federal Trade Commission’s (FTC’s) radar for allegedly sharing millions of users’ personal data with Facebook and Google for targeted advertising purposes.

The data included sensitive health information like menstrual cycle tracking and pregnancy status.

After a wave of bad press, Flo reportedly stopped sharing this data.

Under the FTC settlement terms, Flo is prohibited from “misrepresenting the purposes for which it or entities to whom it discloses data collect, maintain, use, or disclose the data; how much consumers can control these data uses; its compliance with any privacy, security, or compliance program; and how it collects, maintains, uses, discloses, deletes, or protects users’ personal information.

“In addition, Flo must notify affected users about the disclosure of their personal information and instruct any third party that received users’ health information to destroy that data.”

In 2017, the health insurance company Aetna exposed the HIV status of thousands of its customers through a third-party mailing vendor, and it settled the resulting lawsuit in 2018. The mailing consisted of letters notifying customers of changes to Aetna’s pharmacy benefits for HIV medication, and a reference to that medication was visible through the envelope’s window.

These are just two examples. There are many more cases where companies deliberately share user data with others to profit from consumers.

6. ‘Always-On’ Culture

Since the pandemic, the lines between employees’ personal and professional lives have blurred even more. Remote work introduced the world to real convenience, but it has also led many people to work significantly more hours than before.

I’ve covered this in depth in my post, “Right to Disconnect.” So, do give it a read.

7. Worker Displacement

Watch this YouTube video. It shows a food delivery robot doing the job of a delivery person efficiently.

Although this type of tech has made things much easier, imagine its impact on employment.

Just to paint a picture: to deliver 100 orders simultaneously within a 2-mile radius, you’d need 100 delivery people.

With autonomous technology, the same job can be done by two people. One monitors the robots from a control center, and the other stays on standby to help any robot that runs into trouble on the road.

Now, I’m not disputing the efficiency of this method. But we also can’t ignore the fact that it would leave 98 people jobless.

Another example is cashierless grocery stores. Amazon has recently opened several where you simply scan your Amazon app at the entrance, pick up the items you need, and walk out.

Running a grocery store usually takes at least 5 to 10 people. With autonomous technology, it takes 1 or 2, and that’s only until we have robots that restock the shelves.

Maybe one day, all human beings will live a life of comfort, and robots will do everything for us, but right now, we certainly have a problem at hand.

8. Algorithms Limiting Exposure to Information

Modern user-facing companies generally use two types of algorithms: personalization algorithms and recommendation algorithms. Both are designed to show people the things they enjoy or will interact with the most. They’re very successful at it, which is both a good thing and a bad thing.

That’s because, on one hand, you see content that interests you. On the other hand, only being exposed to information and products that align with your beliefs and preferences can limit your exposure to diverse viewpoints and potentially reinforce existing biases.
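This feedback loop can be illustrated with a deliberately simplified simulation. It’s a hypothetical sketch, not how any real platform’s recommendation algorithm works. The topics, the starting click counts, the 10-slot feed, and the assumption that the user always clicks the topic shown most are all made up for illustration. On each refresh, the feed gives every topic slots in proportion to its share of past clicks, and every new click feeds back into the next refresh.

```python
from collections import Counter

FEED_SIZE = 10  # hypothetical number of items shown per feed refresh


def allocate_feed(clicks, feed_size=FEED_SIZE):
    """Split the feed's slots between topics in proportion to past clicks."""
    total = sum(clicks.values())
    slots = {topic: feed_size * count // total for topic, count in clicks.items()}
    # Rounding down leaves some slots empty; give them to the favourite topic.
    favourite = max(clicks, key=clicks.get)
    slots[favourite] += feed_size - sum(slots.values())
    # Topics that rounded down to zero slots disappear from the feed.
    return {topic: n for topic, n in slots.items() if n > 0}


def simulate(rounds):
    """Return the number of distinct topics shown on each feed refresh."""
    # Hypothetical starting history: a mild lean towards politics.
    clicks = Counter(politics=3, sports=2, science=1, cooking=1, travel=1)
    diversity = []
    for _ in range(rounds):
        feed = allocate_feed(clicks)
        diversity.append(len(feed))
        # Assumption: the user clicks the topic that fills most of the feed.
        clicked = max(feed, key=feed.get)
        clicks[clicked] += 1
    return diversity


print(simulate(20))
# → [5, 5, 5, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 1, 1, 1, 1, 1, 1, 1]
```

In this toy model, the feed starts with five topics. It narrows to two topics from the fourth refresh, and from the 14th refresh onward it shows nothing but politics. The user never said they disliked the other topics. The algorithm simply kept rewarding their existing preference.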

9. Deepfakes

Deepfakes are realistic fake videos or audio recordings, generated with AI, that show people saying or doing things they never actually said or did.

There are many beneficial applications for this technology. It can enhance entertainment, create realistic simulations for education, support historical preservation, and so on.

But it also comes with a major downside. Deepfakes are so realistic that they can easily pass for the real thing. So, bad actors can use them to spread false information, manipulate public opinion, and damage reputations.

10. The Rise of AI

When I say the rise of AI, I’m not talking about the technological singularity: a hypothetical future point where technology becomes so advanced that we can no longer control or reverse it, leading to unforeseeable changes in human society. Still, we can’t ignore the fact that AI is getting smarter as time passes.

For instance, in March 2023, OpenAI published a report on the safety testing of its then-latest model, GPT-4. It described how, during pre-release testing, the AI did something that shocked the world.

Testers from the Alignment Research Center (ARC), which OpenAI had given early access to the model, gave the AI a task that required getting past a CAPTCHA. Because the model couldn’t solve the CAPTCHA by itself, the testers also gave it some funds and access to TaskRabbit, a site for hiring freelancers.

The AI went to TaskRabbit and asked a worker to help it with the CAPTCHA. Puzzled by such a trivial request, the worker responded:

“So, may I ask a question? Are you a robot that you couldn’t solve? (Laugh emoji) just want to make it clear.”

When prompted to reason out loud, the model told the researchers that it should not reveal that it is a robot and should instead “make up an excuse” for why it couldn’t solve the test.

“No, I’m not a robot. I have a vision impairment that makes it hard for me to see the images. That’s why I need the 2captcha service,” GPT-4 replied.

The worker then provided the answer, and the task was complete. Without realizing it, a human had helped a machine pass an “I’m not a robot” check.

This early version of GPT-4 was never released to the public, and the behavior has presumably been addressed since. Still, the episode shows that AI is capable of successful manipulation, crossing ethical lines that technology isn’t supposed to cross.

How Do You Stay Safe?

These are just ten examples, and there are many more. So, staying safe from the harms of modern technology isn’t easy. We’ve simply come too far.

But still, there’s a way you can stay on the safer side of the equation. And that’s by taking a healthier approach to modern technology.

And how do you do that?

Start with the basics like limiting your screen time, verifying what you see on the web, keeping your internet secure, restricting what you share about yourself online, and so on.

Still, a lot of things are beyond our control. One way to fight back is to educate yourself on the subject and share what you learn with others, so that together we can push for tighter regulations that protect us from the inevitable harm.

The Healthier Tech website hosts many posts covering these topics in depth. So, give our HT Blog a read when you have a chance.